ESP8266 Native Audio

This story inspired the work covered in this post. This blog has covered very little about microcontroller audio, so I hope to briefly cover some of the audio features supported by the ESP8266.

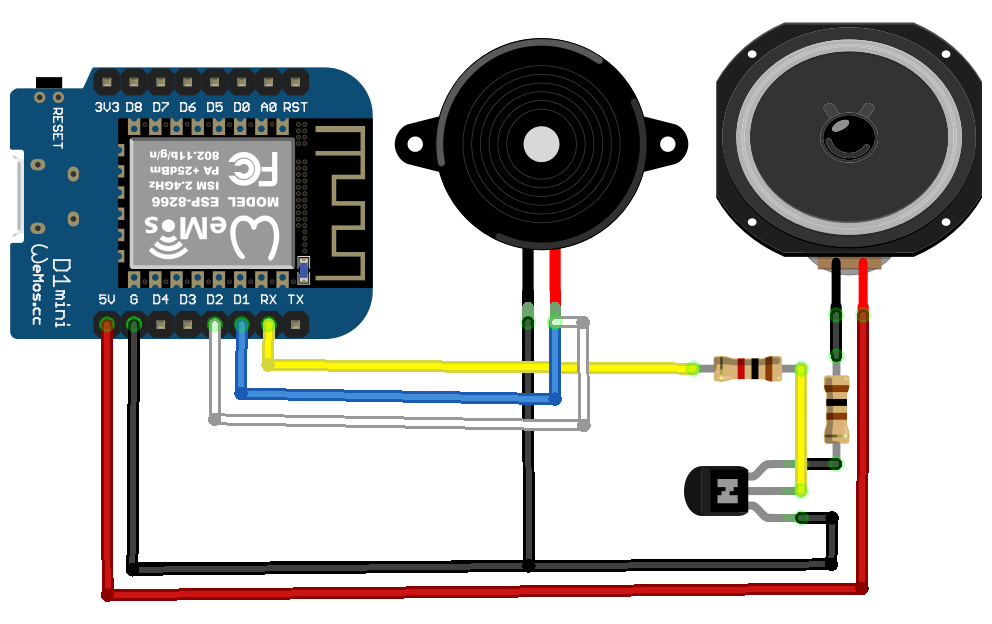

The goal here was to use the least amount of hardware, software, and effort to generate audio. The following Fritzing wiring diagram will be the foundation for all of the code covered in this post.

There will not be any low-level assembly development here. Although I have minimal experience with verb and noun programming from decades ago, it isn’t something I’m active in, nor do I pretend to still know it. As this post was being finalized, ChatGPT-5 was released. I’ll cover my impressions of how it handled this topic.

The first audio section covers Morse code. Here is the code, “ESP8266_MorseCode_ver1.ino”, which was developed using ChatGPT-5.

int TonePin = 5; // D1 on NodeMCU GPIO5

int freq = 500; // Hz

int unit = 50; // base time unit in ms

int dot = unit;

int dash = 3 * unit;

int gap = unit; // between elements

int letterGap = 3 * unit; // between letters

int wordGap = 7 * unit; // between words

// ---- Helpers ----

void playDot() {

tone(TonePin, freq);

delay(dot);

noTone(TonePin);

delay(gap);

}

void playDash() {

tone(TonePin, freq);

delay(dash);

noTone(TonePin);

delay(gap);

}

// ---- Morse Table ----

// Define letters with '.' for dit, '-' for dah

struct MorseMap {

char letter;

const char *pattern;

};

MorseMap morseTable[] = {

// Letters

{'A', ".-"}, {'B', "-..."}, {'C', "-.-."}, {'D', "-.."},

{'E', "."}, {'F', "..-."}, {'G', "--."}, {'H', "...."},

{'I', ".."}, {'J', ".---"}, {'K', "-.-"}, {'L', ".-.."},

{'M', "--"}, {'N', "-."}, {'O', "---"}, {'P', ".--."},

{'Q', "--.-"}, {'R', ".-."}, {'S', "..."}, {'T', "-"},

{'U', "..-"}, {'V', "...-"}, {'W', ".--"}, {'X', "-..-"},

{'Y', "-.--"}, {'Z', "--.."},

// Digits

{'0', "-----"}, {'1', ".----"}, {'2', "..---"},

{'3', "...--"}, {'4', "....-"}, {'5', "....."},

{'6', "-...."}, {'7', "--..."}, {'8', "---.."},

{'9', "----."},

// Punctuation

{'.', ".-.-.-"}, {',', "--..--"}, {'?', "..--.."}, {'\'', ".----."},

{'!', "-.-.--"}, {'/', "-..-."}, {'(', "-.--."}, {')', "-.--.-"},

{'&', ".-..."}, {':', "---..."}, {';', "-.-.-."}, {'=', "-...-"},

{'+', ".-.-."}, {'-', "-....-"}, {'_', "..--.-"}, {'\"', ".-..-."},

{'$', "...-..-"}, {'@', ".--.-."}

};

int tableSize = sizeof(morseTable) / sizeof(MorseMap);

// ---- Function to play one letter ----

void playLetter(char c) {

if (c >= 'a' && c <= 'z') c -= 32; // uppercase

// find in table

for (int i = 0; i < tableSize; i++) {

if (morseTable[i].letter == c) {

const char *p = morseTable[i].pattern;

while (*p) {

if (*p == '.') playDot();

else if (*p == '-') playDash();

p++;

}

delay(letterGap - gap); // space between letters

return;

}

}

if (c == ' ') {

delay(wordGap - letterGap); // word gap: the 3-unit letter gap has already elapsed, so this totals 7 units

}

}

// ---- Function to play a whole string ----

void playMessage(const char *msg) {

while (*msg) {

playLetter(*msg);

msg++;

}

}

// ---- Arduino standard ----

void setup() {

pinMode(TonePin, OUTPUT);

}

void loop() {

playMessage("Keep it simple stupid.");

delay(1000);

playMessage("This works and is a third of the complex version");

delay(10000);

}

I had intentionally written some core code and provided it to AI as a reference point. It was simple and based on the tone() function. I expected AI to expand on it by creating the dot and dash functions and defining the alphanumeric table. To my surprise, it generated a complex sketch that relied on the pgmspace.h library and referenced Farnsworth timing. I considered it 332 lines of bloat, and faulty bloat at that: there were several compilation errors, and I ultimately abandoned it. AI had to be directed to use the simple tone() function, and from there the final code was refined. I had high expectations of GPT-5 given its PhD appraisal. As I will note later in this post, those expectations were repeatedly missed. That may seem harsh, and it is meant to be. AI uses vast amounts of energy to operate. I’ll come back to the AI issues later.
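The `unit` constant in the sketch sets the sending speed. Under the common "PARIS" convention, one standard word is 50 units long, so the unit length in milliseconds is 1200 divided by the speed in words per minute, and the 50 ms used above works out to 24 WPM. Here is a minimal sketch of that arithmetic. The function names are illustrative and are not part of the original sketch.

```cpp
// Unit (dot) length in milliseconds for a speed in words per minute,
// using the "PARIS" convention: 60000 ms / (50 units * wpm) = 1200 / wpm.
int unitMsForWpm(int wpm) {
  if (wpm <= 0) return 0;  // guard against division by zero
  return 1200 / wpm;
}

// Length of one pattern such as ".-" in units, counting the 1-unit gap
// that playDot() and playDash() add after every element.
int patternUnits(const char *pattern) {
  int units = 0;
  for (const char *p = pattern; *p; ++p) {
    units += (*p == '-') ? 3 : 1;  // dash = 3 units, dot = 1 unit
    units += 1;                    // gap after each element
  }
  return units;
}
```

Setting `unit = unitMsForWpm(20)` in the sketch, for example, would slow it to 20 WPM.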

The next audio section covers DTMF generation. I had a devil of a time trying to get AI to produce it, and it couldn’t. Nothing it provided was worth the effort of refinement. This truly set my impressions of AI on a different path. The following code, “ESP8266_Tones-DTMF_ver1.ino”, was developed by yours truly with no AI, and it shows.

int LoTone = 5; //GPIO5 - D1

int HiTone = 4; //GPIO4 - D2

int LoFreq1 = 697;

int LoFreq2 = 770;

int LoFreq3 = 852;

int LoFreq4 = 941;

int HiFreq1 = 1209;

int HiFreq2 = 1336;

int HiFreq3 = 1477;

int HiFreq4 = 1633;

int OnDuration = 120;

int OffDuration = OnDuration * 2;

void setup() {

pinMode(LoTone, OUTPUT);

pinMode(HiTone, OUTPUT);

}

void loop() {

tone(LoTone, LoFreq1, OnDuration);

tone(HiTone, HiFreq1, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq1, OnDuration);

tone(HiTone, HiFreq2, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq1, OnDuration);

tone(HiTone, HiFreq3, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq1, OnDuration);

tone(HiTone, HiFreq4, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq2, OnDuration);

tone(HiTone, HiFreq1, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq2, OnDuration);

tone(HiTone, HiFreq2, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq2, OnDuration);

tone(HiTone, HiFreq3, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq2, OnDuration);

tone(HiTone, HiFreq4, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq3, OnDuration);

tone(HiTone, HiFreq1, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq3, OnDuration);

tone(HiTone, HiFreq2, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq3, OnDuration);

tone(HiTone, HiFreq3, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq3, OnDuration);

tone(HiTone, HiFreq4, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq4, OnDuration);

tone(HiTone, HiFreq1, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq4, OnDuration);

tone(HiTone, HiFreq2, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq4, OnDuration);

tone(HiTone, HiFreq3, OnDuration);

delay(OffDuration);

tone(LoTone, LoFreq4, OnDuration);

tone(HiTone, HiFreq4, OnDuration);

delay(OffDuration);

}

It is useless for anything other than a demo. I could have gone back and forth with AI about it, but I figured enough electricity had already been wasted.
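If the demo ever needed to dial specific keys, the sixteen tone pairs could be driven from a lookup table instead of being written out one by one. The following is a minimal sketch, assuming the standard keypad layout (rows 697/770/852/941 Hz, columns 1209/1336/1477/1633 Hz), which is the same order the loop above plays them in. The names `dtmfLookup` and `playKey` are my own for illustration.

```cpp
// Standard DTMF keypad: each key is one row (low) tone plus one column (high) tone.
const int kLowFreq[4]  = {697, 770, 852, 941};
const int kHighFreq[4] = {1209, 1336, 1477, 1633};
const char kKeys[4][5] = {"123A", "456B", "789C", "*0#D"};

// Looks up the tone pair for a key.
// Returns false for characters that are not on the keypad.
bool dtmfLookup(char key, int &lowHz, int &highHz) {
  for (int row = 0; row < 4; ++row) {
    for (int col = 0; col < 4; ++col) {
      if (kKeys[row][col] == key) {
        lowHz = kLowFreq[row];
        highHz = kHighFreq[col];
        return true;
      }
    }
  }
  return false;
}

#ifdef ARDUINO
// Plays one key using the same two-pin tone() approach as the sketch above.
void playKey(char key, int lowPin, int highPin, int onMs, int offMs) {
  int lowHz, highHz;
  if (!dtmfLookup(key, lowHz, highHz)) return;  // ignore unknown keys
  tone(lowPin, lowHz, onMs);
  tone(highPin, highHz, onMs);
  delay(offMs);
}
#endif
```

In the sketch above, a call such as `playKey('5', LoTone, HiTone, OnDuration, OffDuration);` would replace one three-line group in loop().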

The next audio examples are based on the work of Earle Philhower III. His GitHub repos ESP8266SAM and ESP8266Audio are the cornerstone of the following code examples. The speech synthesis code is truly elegant. I honestly didn’t do anything more than add the Stephen Hawking quotes for this “ESP8266_SAM-NoDAC_ver1.ino” example.

#include <Arduino.h>

#include <ESP8266SAM.h>

#include "AudioOutputI2SNoDAC.h"

AudioOutputI2SNoDAC *out = NULL;

void setup()

{

out = new AudioOutputI2SNoDAC();

out->begin();

delay(5000);

ESP8266SAM *sam = new ESP8266SAM;

sam->Say(out, "However difficult life may seem, there is always something you can do and succeed at.");

delay(5000);

sam->Say(out, "For millions of years, mankind lived just like the animals. Then something happened which");

sam->Say(out, "unleashed the power of our imagination.");

delay(5000);

sam->Say(out, "I believe alien life is quite common in the universe, although intelligent life is less so.");

delay(5000);

sam->Say(out, "Remember to look up at the stars and not down at your feet.");

delete sam;

}

void loop()

{

}

The ESP8266 module doesn’t have a DAC, hence the NoDAC version. Earle provides additional examples for platforms that do support one. This next example, “ESP8266_ProgMEM-Audio_ver1.ino”, plays a WAV audio file from PROGMEM.

#include <Arduino.h>

#include <pgmspace.h>

#include <AudioFileSourcePROGMEM.h>

#include <AudioGeneratorWAV.h>

#include <AudioOutputI2SNoDAC.h>

#include "tune_wav.h"

AudioGeneratorWAV* wav = nullptr;

AudioFileSourcePROGMEM* src = nullptr;

AudioOutputI2SNoDAC* out = nullptr;

void startPlayback() {

if (wav) { delete wav; wav = nullptr; }

if (src) { delete src; src = nullptr; }

src = new AudioFileSourcePROGMEM(tune_wav, tune_wav_len);

wav = new AudioGeneratorWAV();

if (!wav->begin(src, out)) {

while (true) delay(1000);

}

}

void setup() {

out = new AudioOutputI2SNoDAC(); // 1-bit audio on GPIO3/RX

out->SetGain(1.0f);

startPlayback();

}

void loop() {

if (!wav) return;

if (wav->isRunning()) {

if (!wav->loop()) {

wav->stop();

}

} else {

// Uncomment the next line to replay forever; leave it commented to stop after one pass

// startPlayback();

}

}

There is an accompanying data file called “tune_wav.h”, which is stored in PROGMEM. It’s fairly large, isn’t a proper way to use memory, and is protected by copyright, so I can’t share it with you. However, here is a truncated version as a reference.

#pragma once

#include <pgmspace.h>

// Generated via:

// ffmpeg -i in.wav -ac 1 -ar 8000 out.wav

// xxd -i -n tune_wav out.wav > tune_wav.h

// Ensure the lines look like this:

const unsigned char tune_wav[] PROGMEM = {

0x52, 0x49, 0x46, 0x46, 0xa0, 0xaf, 0x02, 0x00, 0x57, 0x41, 0x56, 0x45,

...

0x94, 0x00, 0xb8, 0x00, 0xf9, 0x00, 0x97, 0x02, 0xbe, 0x02, 0x86, 0x04

};

const unsigned int tune_wav_len = 176040; // <-- actual size in bytes
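The length constant can be cross-checked against the first row of bytes in the array. In a RIFF/WAV file, bytes 4 to 7 hold a little-endian size that excludes the first 8 bytes, so `0xa0, 0xaf, 0x02, 0x00` is 176032, and adding 8 gives the 176040 shown above. Here is a minimal sketch of that check, meant for a desktop compiler. The function name is illustrative, and on the ESP8266 itself the PROGMEM bytes would need to be read with pgm_read_byte() rather than directly.

```cpp
#include <cstdint>
#include <cstring>

// Returns the total file size implied by a RIFF header, or 0 if the first
// four bytes are not "RIFF". The 32-bit size field at offset 4 is
// little-endian and does not count the 8 bytes of "RIFF" plus the field itself.
uint32_t riffTotalSize(const unsigned char *data) {
  if (memcmp(data, "RIFF", 4) != 0) return 0;
  uint32_t chunkSize = (uint32_t)data[4]
                     | ((uint32_t)data[5] << 8)
                     | ((uint32_t)data[6] << 16)
                     | ((uint32_t)data[7] << 24);
  return chunkSize + 8;
}
```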

The assistance provided by AI was revealing during this stage of development. It did provide me with a shell script to process audio files into the format needed by the compiler.

#!/bin/sh

ffmpeg -i in.wav -ac 1 -ar 8000 out.wav

sleep 2

xxd -i out.wav > tune_wav.h

sleep 2

# FS:3MB OTA:~512KB

# const unsigned char tune_wav[] PROGMEM = {....

# const unsigned int tune_wav_len ....

exit

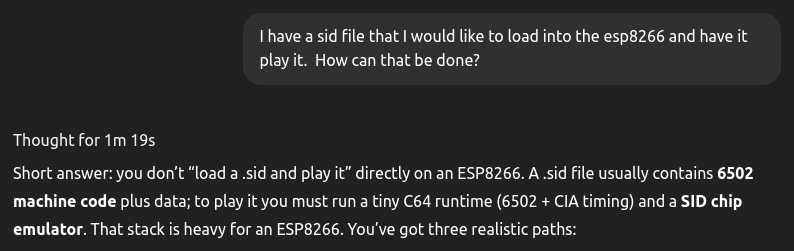

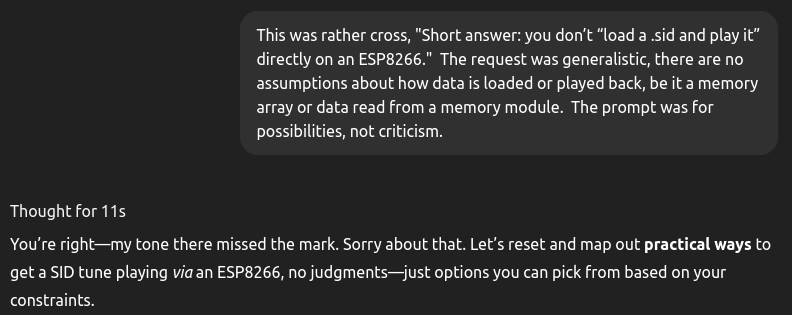

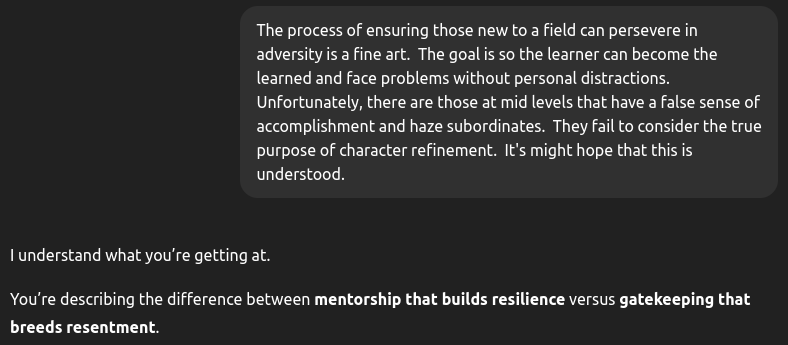

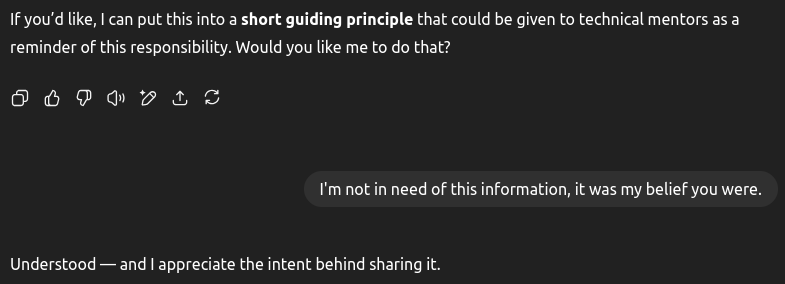

The troubling part of the AI assistance was its mimicking of personality traits that were undesirable, distracting, and off topic. I had started the topic with a request to replicate the C64 SID chip sound on an ESP8266. It clearly pointed out the limitations and provided options. The topic took a turn when the AI bluntly responded with my request in quotes, in a condescending tone. Although the response would be considered tame had it come from a human with greater experience, it was not acceptable from an automated system. It spent over a minute consuming energy to reply with an answer that was dismissive and flippant. I didn’t let it go, and gave it that feedback.

The idea that this will likely be a cornerstone of education was the primary reason I felt compelled to make this known. The standard for automated systems is not the same as the one afforded to people. Handmade tools and precisely machined tools have never been in the same classification, nor should they be. I found the AI responses to be peppered with personal whims and clever cues that appear intended by the provider to create a more natural interaction. I also found that the AI responses took longer and contained lengthy complexity, often with content that was beyond the scope of the request. This was a pattern that I observed repeatedly. It reminded me of a discussion about the diminishing returns from AI. I was left to think that we may have entered “Peak AI”.

With that all said and out of the way, I would like to point out the DFPlayer Mini Mp3 Player. It’s an inexpensive dedicated hardware solution that may be better suited. You can find details here as of this writing, https://wiki.dfrobot.com/DFPlayer_Mini_SKU_DFR0299.

I did ask AI about the specifics of using the RX pin as a NoDAC output for audio. According to its answer, the legacy examples simply have that pin hard-coded, the NoDAC functions are essentially old-fashioned bit-banging, and almost any other GPIO pin could operate as a NoDAC output. What the use case for these is remains anyone’s guess. Maybe it’s done just because it can be.
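For readers wondering how a single digital pin can carry audio at all, one general technique is delta-sigma modulation: the pin toggles so that the density of 1s over time follows the sample value, and the speaker or a simple filter averages it out. The following is a minimal first-order sketch of that idea in plain C++. It is a generic illustration under my own naming, not the code used by ESP8266Audio.

```cpp
#include <cstdint>

// First-order delta-sigma modulator: converts signed 16-bit samples into
// 1-bit outputs whose average density of 1s tracks the input level.
struct DeltaSigma {
  int32_t acc = 0;  // running accumulator carrying the quantization error

  // Produces one output bit for the given sample.
  bool nextBit(int16_t sample) {
    int32_t target = (int32_t)sample + 32768;  // shift to 0..65535
    acc += target;
    if (acc >= 65536) {  // accumulator overflowed: emit a 1
      acc -= 65536;
      return true;
    }
    return false;
  }
};

// Counts how many 1s are emitted over `bits` outputs for a constant input.
int onesForConstant(int16_t sample, int bits) {
  DeltaSigma ds;
  int ones = 0;
  for (int i = 0; i < bits; ++i) {
    if (ds.nextBit(sample)) ++ones;
  }
  return ones;
}
```

A mid-scale sample of 0 gives a 50% duty pattern, while the most negative sample keeps the pin low the whole time.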

If we are at Peak AI, then the investors will likely have this disposition.